This is a guest post on Authoritative-ness and Attributes by Dr. Peter Alterman. Peter is the Senior Advisor to the NSTIC NPO at NIST, and a thought leader who has done pioneering and award-winning work in areas ranging from IT Security and PKI to Federated Identity. You may not always agree with Peter, but he brings a perspective that is always worth considering. [NOTE: FICAM ACAG WG has not come to any sort of consensus on this topic yet] - Anil John

As I have argued in public and private, I continue to believe that the concept of assigning a Level of Assurance (LOA) to an attribute is bizarre: it makes real-time authorization decisions even more burdensome than they already can be, and it does nothing but confuse both Users and Relying Parties.

The Laws of Attribute Validity

The simple, basic logic is this: First, an attribute issuer is authoritative for the attributes it issues. If you ask an issuer if a particular user’s asserted attribute is valid, you’ll get a Yes or No answer. If you ask that issuer what the LOA of the user’s attribute is, it will be confused – after all, the issuer issued it. The answer is binary: 1 or 0, T or F, Y or N. Second, a Relying Party is authoritative for determining what attributes it wants/needs for authorization and more importantly, it is authoritative for deciding what attribute authorities to trust. Again the answer is binary: 1 or 0, T or F, Y or N. Any attribute issuer that is not authoritative for the attribute it issues should not be trusted and any RP that has no policy on which attribute providers OR RESELLERS to trust won’t survive in civil court.
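The two laws can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names, the `university` issuer and the sample attribute are all hypothetical, and a real deployment would query the issuer over a protocol rather than an in-memory set.

```python
from dataclasses import dataclass, field


@dataclass
class AttributeIssuer:
    """Hypothetical issuer: authoritative for the attributes it issued."""
    name: str
    issued: set = field(default_factory=set)  # (user, attribute, value) triples

    def is_valid(self, user: str, attribute: str, value: str) -> bool:
        # First law: the issuer answers Yes or No, never "LOA 2".
        return (user, attribute, value) in self.issued


@dataclass
class RelyingParty:
    """Hypothetical RP: authoritative for which issuers it trusts."""
    trusted_issuers: set  # issuer names accepted by the RP's policy

    def accept(self, issuer: AttributeIssuer, user: str,
               attribute: str, value: str) -> bool:
        # Second law: trusting an issuer is itself a Yes/No policy decision.
        if issuer.name not in self.trusted_issuers:
            return False
        return issuer.is_valid(user, attribute, value)


university = AttributeIssuer("university", {("alice", "degree", "PhD")})
reseller = AttributeIssuer("unknown-reseller", {("alice", "degree", "PhD")})
rp = RelyingParty(trusted_issuers={"university"})
```

Both checks return a plain boolean; nothing in the exchange carries a graded assurance value. The same assertion from `reseller` is rejected simply because the RP's policy does not list it.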

Secondary Source Cavil

“But wait,” the fans of attribute LOA say, “what if you ask a secondary source if that same user’s attribute is valid?” This is asking an entity that did not issue the attribute to assert its validity. In this case the RP has to decide how much it trusts the secondary source and then how much it trusts the secondary source to assert the true status of the attribute. Putting aside questions of why one would want to rely on secondary sources in the first place, implicit in this use case is the assumption that the RP has previously decided whom to ask about the attribute. If the RP has decided to ask the secondary source, that is also a trust decision, which one would assume would have been based on an evaluative process of some sort. After all, why would an RP choose to trust a source just a little bit? It really doesn’t make sense and complicates the trust calculation no end. Not to mention raising the eyebrows of both the CISO and a corporate/agency lawyer, both very bad things.

Thus, the RP decides to trust the assertion of the secondary source. The response back to the RP from the secondary source is binary and the trust decision is binary. Some Federation Operators (or Trust Framework Providers, take your pick) may serve as repositories of trusted sources for attribute assertions as a member service, and in that case, too, the RP would choose to trust the attribute sources of the FO/TFP explicitly. If a Federation Operator/TFP chooses not to trust certain secondary sources, it simply doesn’t add them to its white list. Member RPs that choose to trust the secondary attribute sources would do so based upon local determinations, underscoring the role of prior policy implementation.

Either directly or indirectly, an RP or a TFP makes a binary trust decision about which attribute providers to trust, and so the example reduces to the original law.

Transient Validity, aka Dynamic Attributes

Another circumstance where attribute LOA might be considered is querying about an attribute which changes rapidly in real time. One must accept that an attribute is either valid or invalid at the time of query. If temporality is of concern, that is a whole second attribute, and a trusted timestamp must be a necessary part of the attribute validation process. A query from an online business to an end user’s bank would want to know if the user had sufficient funds to cover the transaction at the time the transaction is confirmed. At the time of the query the answer is binary, yes or no. It would also need a trusted timestamp that itself could be validated as True or False. That is, two separate attributes are required, one for content and one for time, both of which must be true for the RP to trust and therefore complete the transaction. Even for ephemeral attributes the answer is directly relevant to the state of the attribute at the time of query and that answer is binary, Y or N, the only difference being that a second trusted attribute – the timestamp – is required. The business makes a binary decision to trust that the user has the funds to pay for the purchase at the time of purchase – and of query – and concludes the sale or rejects it. The case resolves back to binary again.
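The bank example can be sketched as two independent boolean checks that must both pass. The function names, the amounts in cents and the 30-second freshness window are assumptions made purely for illustration; establishing that the timestamp itself comes from a trusted source (for example by verifying a signature) is left out of the sketch.

```python
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(seconds=30)  # assumed freshness window, illustrative only


def funds_sufficient(balance_cents: int, amount_cents: int) -> bool:
    # Content attribute: does the balance cover the purchase? Yes or No.
    return balance_cents >= amount_cents


def timestamp_valid(asserted_at: datetime, now: datetime,
                    max_age: timedelta = MAX_AGE) -> bool:
    # Time attribute: was the assertion made recently enough? Yes or No.
    age = now - asserted_at
    return timedelta(0) <= age <= max_age


def complete_sale(balance_cents: int, amount_cents: int,
                  asserted_at: datetime, now: datetime) -> bool:
    # Both attributes must be true for the RP to conclude the sale.
    return (funds_sufficient(balance_cents, amount_cents)
            and timestamp_valid(asserted_at, now))


now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
fresh = now - timedelta(seconds=5)
stale = now - timedelta(minutes=10)
```

With these values, `complete_sale(5000, 4200, fresh, now)` is accepted, while the same balance with the `stale` timestamp is rejected.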

Obscurity of Attribute Source

Admittedly, things can get complicated when the identity of the attribute issuer is obscure, such as US Citizenship for the native-born. However, once again the RP makes an up-front decision about which source or sources it’s going to ask about that attribute. It doesn’t matter what the source is; the point is that the RP will make a decision on which source it deems authoritative and it will trust that source’s assertion of that attribute. In the citizenship example, the RP might choose either of two sources: the State Department, because if the user has been issued a US passport that’s a priori legal proof of citizenship, or some other governmental entity that keeps a record of the live birth in a US jurisdiction, which is another a priori legal proof of citizenship. However, if the application is a National Security RP, for example, it might query a whole host of data sources to determine if the user holds a passport from some other nation state. In addition to the attribute sources query, which in this case might get quite complex (certainly enough for the user to disconnect and pick up the phone instead), the application will have to include scripts telling it where to look and what answers to look for. At the end of the whole process, the application is going to make a binary decision about whether to trust that the user is a US citizen or not. All that intermediate drama again resolves down to the original case: the RP makes an up-front determination of what attribute source or sources to trust, though in this one the RP builds a complicated multi-authority search-and-weigh process as part of its authorization determination.

RPs That Calculate Trust Scores

Many commercial RPs, especially in the financial services industry, calculate scores to determine whether to trust authentication and/or authorization. In these situations the RP is making trust calculations, weighing and scoring. Yet it is the RP that is calculating on the inputs, not calculating the inputs. It uses the authorization data and the attribute data to make authentication and/or authorization decisions with calculation code that is directly relevant to its risk mitigation strategy. In fact, this begins to look a lot like the National Security version of the Obscurity condition.

In these vexed situations, what the RP is doing is declining to trust any single attribute provider outright and instead calculating a trust decision based upon a previously determined algorithm in which the responses from all the untrusted providers are somehow transformed into a trusted attribute. The algorithm seems to be based upon determining a trust decision by using multiple attribute sources to reinforce each other in some predetermined way. This method reminds me of a calculus problem, that is, integrating towards zero (risk), and perhaps that’s what the algorithm even looks like.
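A minimal sketch of such local scoring might look like the following. The source names, weights and threshold are all hypothetical and do not represent any real RP's algorithm. The point is only that the inputs arrive as Yes/No assertions, the weighting lives entirely inside the RP, and the output is again Yes or No.

```python
# Hypothetical local policy: how much each source's "Yes" counts for this RP.
WEIGHTS = {"bureau_a": 40, "bureau_b": 35, "utility_record": 25}
THRESHOLD = 60  # assumed cut-off chosen by the RP's risk mitigation strategy


def authorize(assertions: dict, weights: dict = WEIGHTS,
              threshold: int = THRESHOLD) -> bool:
    """Combine binary assertions from several sources into one decision.

    `assertions` maps a source name to that source's Yes/No answer.
    Sources missing from `weights` contribute nothing to the score.
    """
    score = sum(weights.get(source, 0)
                for source, answer in assertions.items() if answer)
    # The calculation is local to the RP; the result is binary.
    return score >= threshold


two_agree = {"bureau_a": True, "bureau_b": True, "utility_record": False}
one_only = {"bureau_a": True, "bureau_b": False, "utility_record": False}
```

Here `authorize(two_agree)` passes (a score of 75 against a threshold of 60) and `authorize(one_only)` fails (a score of 40). The weights describe the RP's own policy, not a property transmitted with the attribute.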

Attribute Probability

Colleagues who have reviewed this [position paper] in draft have pointed out that data aggregators sometimes have less high-quality (attribute) data about certain individuals, such as young people, and therefore some include a probability number along with the transmitted attribute data. While it may mean that the data carries a level of assurance assertion to the attribute authority, it’s not really a level of assurance assertion to the RP. The RP, again, has chosen to trust a data aggregator as an authoritative attribute source, presumably because it has reviewed the aggregator’s business processes, accepts its model for probability ranking and chooses to incorporate that probability into its own local scoring algorithm or authorization determination process. In other words, the aggregator is deemed authoritative and its probability scoring is considered authoritative as well. This is, yet again, a binary Y or N determination.

Why It Matters

There are compelling operational reasons why assigning assurance levels to attribute assertions, or even asserters, is a bad idea. It’s because, simply, anything that complicates the architecture of the global trust infrastructure is bad, and especially bad if that complication is built on top of a failure to distinguish between a data input and local data manipulation. As the example above illuminates, the attribute and asserter(s) are both trusted by the RP application while the extent to which the trusted data is reliable is questionable and thus manipulated. Insisting on scoring the so-called trustworthiness of an attribute asserter is in essence assigning an attribute to an attribute, a trustworthiness attribute. The policies, practices, standards and other dangling elements necessary to deploy attributes with attributes, then interpret them and utilize them, even if such global standardization for all RP applications could be attained, constitute an unsupportable waste of resources. Even worse, it threatens to sap the momentum necessary to deploy an attribute management infrastructure even as solutions are beginning to emerge from the conference rooms around the world.

QED, Sort of

The Two Laws of Attribute Validity notwithstanding, people can – and have – created Rube Goldberg-ian use cases that require attribute LOA and have even plopped them into a deployed solution (to increase billable hours, one suspects), but they’re essentially useless. I hate to beat this dead horse, but each case I’ve listened to reduces to a binary decision. The bottom line is that the RP makes policy decisions up front about what attribute provider(s) to trust or not trust, and these individual decisions lead to binary assertions of attribute validity either directly or indirectly through local processing.

:- by Peter Alterman, Ph.D.

RELATED POSTS

What is the minimum set of attributes needed to uniquely identify a person within a system?

What is the minimum set of attributes needed to uniquely identify a person within a system? How will you move the verified attributes from an IdP/CSP/AP to the RP?

How will you move the verified attributes from an IdP/CSP/AP to the RP?

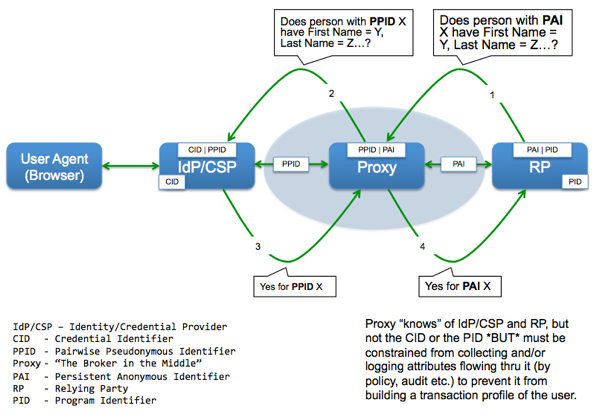

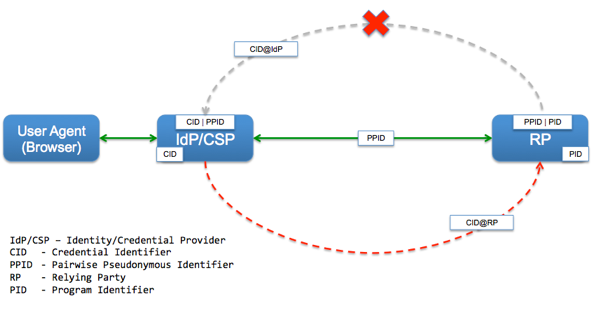

Enrollment is defined here as the process by which a link is established between the (credential) identifier of a person and the identifier used within an RP to uniquely identify the record of that person. For the rest of this blog post, I am going to use the term Program Identifier (PID) to refer to this RP record identifier [A hat tip to our

Enrollment is defined here as the process by which a link is established between the (credential) identifier of a person and the identifier used within an RP to uniquely identify the record of that person. For the rest of this blog post, I am going to use the term Program Identifier (PID) to refer to this RP record identifier [A hat tip to our